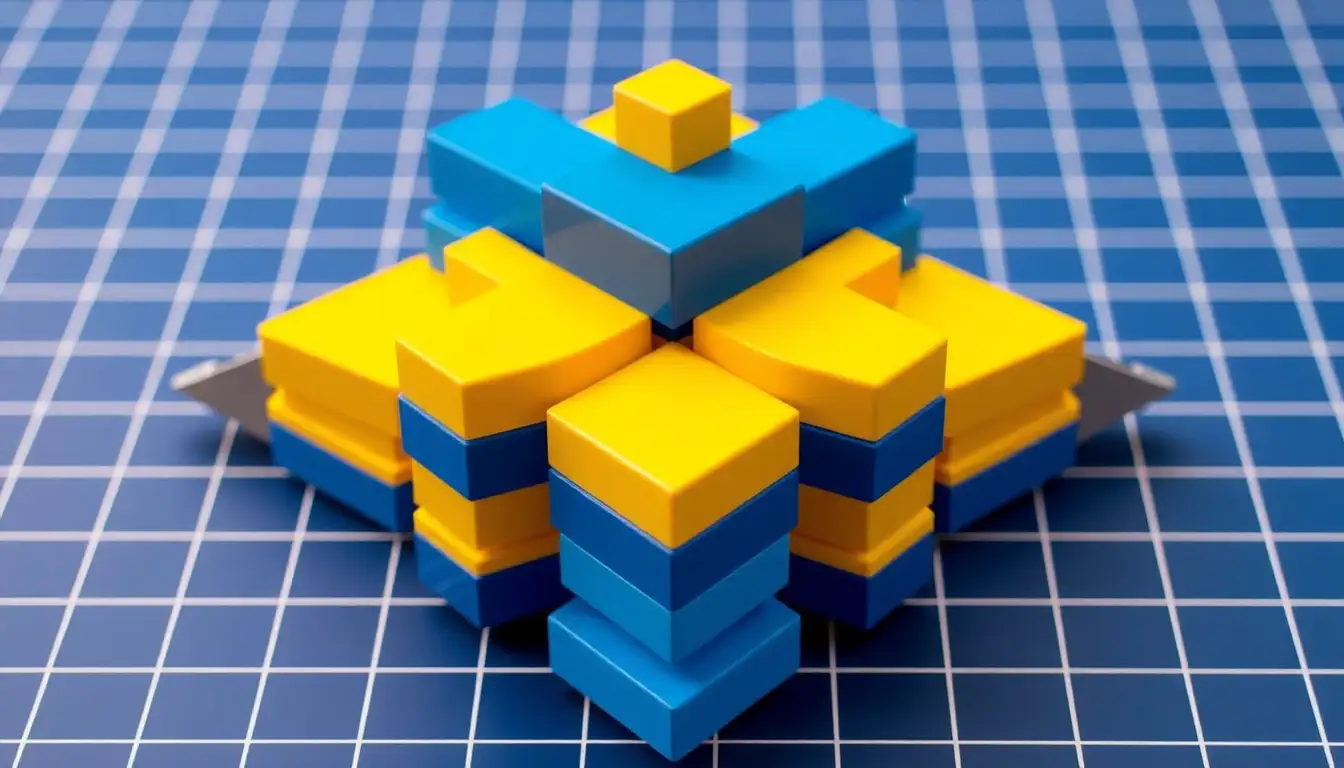

Researchers recently conducted a two-phase evaluation of large language models (LLMs) in a build environment, focusing on creativity, error resilience, and the ability to translate commands into actionable designs. The experiment had both virtual and physical components, in which the system performed additive manufacturing by placing blocks one at a time to construct a designated structure. Because the process is additive, any errors, such as incorrectly positioned blocks, must be accounted for rather than removed, which makes validating the LLM’s performance during the build particularly challenging.

The initial phase was a quantitative assessment on a 10×10 grid. The researchers wrote fifteen “constrained prompts,” each designed to admit exactly one correct answer so that accuracy could be measured precisely. Each LLM’s design was scored with an Intersection over Union (IoU) metric against a manually defined correct answer. Each model ran five trials per prompt, and the IoU scores from those responses were averaged.
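To make the scoring concrete, here is a minimal sketch of how an IoU score and a per-prompt average might be computed. It assumes each design is represented as a collection of occupied `(x, y)` grid cells; that representation, the function names, and the convention for two empty designs are illustrative assumptions, not details taken from the study.

```python
def iou(predicted, reference):
    """Intersection over Union of two designs given as iterables of (x, y) cells."""
    p = {tuple(cell) for cell in predicted}
    r = {tuple(cell) for cell in reference}
    union = p | r
    if not union:
        # Assumption: two empty designs count as a perfect match.
        return 1.0
    return len(p & r) / len(union)


def mean_iou(trials, reference):
    """Average IoU over repeated trials of one prompt (five per prompt in the study)."""
    if not trials:
        raise ValueError("at least one trial is required")
    return sum(iou(trial, reference) for trial in trials) / len(trials)


# Hypothetical example: a three-block row as the correct answer.
reference = [(0, 0), (1, 0), (2, 0)]
exact = [(0, 0), (1, 0), (2, 0)]
one_misplaced = [(0, 0), (1, 0), (1, 1)]  # shares 2 cells, union has 4 cells

print(iou(one_misplaced, reference))                # 0.5
print(mean_iou([exact, one_misplaced], reference))  # 0.75
```

Because a misplaced block both shrinks the intersection and enlarges the union, a single error is penalized twice, which suits a setting where blocks cannot be removed once placed.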

The second phase consisted of a qualitative assessment on a more compact 5×5 grid. In this stage, the researchers issued open-ended design prompts for simple geometric shapes, such as “star,” “trapezoid,” and “right triangle.” Evaluators analyzed the generated designs based on their feasibility and recognizability. Feasibility was measured against adherence to guidelines, including the requirement to use integer coordinate values and respond correctly in JSON format. Recognizability was defined by whether individuals could accurately identify the shapes produced without prior knowledge of the prompts. Human judges rated each design on a three-point scale, where a rating of 1 signaled that the design was both feasible and recognizable, a 2 represented partial fulfillment of the criteria, and a 3 indicated that neither criterion was met.
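The feasibility criterion lends itself to an automated check, sketched below under several assumptions. The response schema (`{"blocks": [{"x": ..., "y": ...}]}`) is hypothetical, as is the bounds check against the 5×5 grid; the source only names integer coordinates and valid JSON as guidelines. The `rating` helper reads "partial fulfillment" as exactly one of the two criteria being met, which is also an interpretation. Recognizability is a human judgment and is passed in as a plain boolean.

```python
import json

GRID_SIZE = 5  # the qualitative phase used a 5x5 grid


def is_feasible(response_text, grid_size=GRID_SIZE):
    """Return True if the response is valid JSON with integer, in-grid coordinates.

    The {"blocks": [{"x": int, "y": int}, ...]} schema is a hypothetical one.
    """
    try:
        data = json.loads(response_text)
    except json.JSONDecodeError:
        return False
    blocks = data.get("blocks") if isinstance(data, dict) else None
    if not isinstance(blocks, list) or not blocks:
        return False
    for block in blocks:
        if not isinstance(block, dict):
            return False
        for key in ("x", "y"):
            value = block.get(key)
            # bool is a subclass of int in Python, so reject it explicitly.
            if isinstance(value, bool) or not isinstance(value, int):
                return False
            # Assumed guideline: coordinates must fall inside the grid.
            if not 0 <= value < grid_size:
                return False
    return True


def rating(feasible, recognizable):
    """Map the two criteria to the three-point scale: 1 = both, 2 = one, 3 = neither."""
    criteria_met = int(bool(feasible)) + int(bool(recognizable))
    return {2: 1, 1: 2, 0: 3}[criteria_met]


print(is_feasible('{"blocks": [{"x": 0, "y": 4}]}'))    # True
print(is_feasible('{"blocks": [{"x": 0.5, "y": 4}]}'))  # False
print(rating(feasible=True, recognizable=False))         # 2
```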